The Structures of Computation and the Mathematical Structure of Nature

Michael S. Mahoney

Introduction

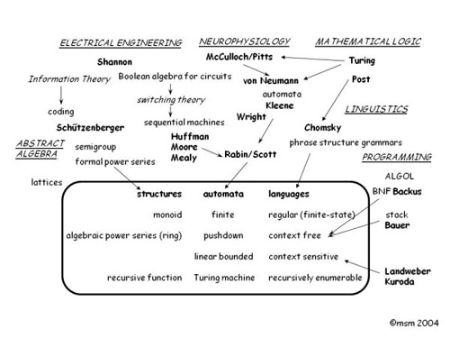

In 1948 John von Neumann expressed the need for a theory of automata to take account of the possibilities and limits of the electronic digital computer, but he could not specify the mathematical form such a theory might take. Over the next two decades, theoretical computer science emerged from the intersection of the research agendas of a wide range of established fields, including mathematics. By 1970 theoretical computer science had its own rubric in Mathematical Reviews, its own textbooks, and its own research agenda, and its concepts and techniques were becoming a resource for other sciences. The talk will describe the main lines of this process, which took place in two stages corresponding to a continuing division in the subject. Beginning in the late 1950s, automata theory took shape as the quasi-algebraic study of formal languages and the machines that recognize them, and of computational complexity as measured by bounded Turing machines. From the early 1960s formal semantics grew out of concern with extensible programming languages, which brought out in striking form the defining characteristic of the stored-program computer, namely that functions and values occupy the same data space. In the process, theoretical computer science gave meaning to the seemingly paradoxical notion of ‘applied abstract algebra’, as it brought the most advanced concepts of twentieth-century mathematics to bear on what has become the defining technology of our time.

In 1984 Springer Verlag added to its series, Undergraduate Texts in Mathematics, a work with the seemingly oxymoronic title Applied Abstract Algebra, by Rudolf Lidl and Gunter Pilz. To the community of practitioners the work may have seemed, as the cliché puts it, ‘long overdue’; indeed, it was one of a spate of similar works that appeared at the time, evidently filling a perceived need. To the historian of modern mathematics, it appears remarkable on several counts, not least that it was meant for undergraduates. Less than fifty years earlier, Garrett Birkhoff's father had asked him what use his new theory of lattices might possibly have, and many non-algebraists had wondered the same thing about the subject as a whole. Applied Abstract Algebra opens with a chapter on lattices, which form a recurrent theme throughout the book. Subsequent chapters present rings, fields, and semigroups. Interspersed among those topics and motivating their organization are the applications that give the book its title and purpose. They include switching circuits, codes, cryptography, automata, formal languages: in short, problems arising out of computing or intimately related to it.

Although the particular topics and treatments differed, Lidl and Pilz were following the lead of Birkhoff himself, who in 1970 together with Thomas Bartee had assembled Modern Applied Algebra, and explained its purpose right at the outset:

The name ‘modern algebra’ refers to the study of algebraic systems (groups, rings, Boolean algebras, etc.) whose elements are typically non-numerical. By contrast, ‘classical’ algebra is basically concerned with algebraic equations or systems of equations whose symbols stand for real or complex numbers. Over the past 40 years, ‘modern’ algebra has been steadily replacing ‘classical’ algebra in American college curricula.1

The past 20 years have seen an enormous expansion in several new areas of technology. These new areas include digital computing, data communication, and radar and sonar systems. Work in each of these areas relies heavily on modern algebra. This fact has made the study of modern algebra important to applied mathematicians, engineers, and scientists who use digital computers or who work in the other areas of technology mentioned above.2

That is, by 1970 the most abstract notions of twentieth-century mathematics had evidently found application in the most powerful technology of the era, indeed the defining technology of the modern world.3 The development of computing since 1970, both theoretical and applied, has repeatedly reinforced the relationship, as one can see from titles like The Algebra of Programming; Categories, Types, and Structures: An Introduction to Category Theory for the Working Computer Scientist; and Basic Category Theory for Computer Scientists, to name just a few.

The problem of applied science and applied mathematics

That strikes me as a remarkable development, all the more so for a discussion that followed a paper I gave a couple of years ago at a conference on the history of software. Computer scientists and software engineers in the audience objected to my talking about the development of theoretical computer science as a mathematical discipline under the title ‘Software as Science–Science as Software’.4 The matters at issue were not technical but professional, philosophical, and historical. My critics disagreed with me—and with each other—over whether software (i.e. computer programs and programming) could be the subject of a science, whether that science was mathematics, and whether mathematics could be considered a science at all.

There was much common wisdom in the room, expressed with the certainty and conviction that common wisdom affords. Two arguments in particular are pertinent here. First, the computer is an artifact, not a natural phenomenon, and science is about natural phenomena. Second, as a creation of the human mind, independent of the physical world, mathematics is not a science. It is at most a tool for doing science.

Modern technoscience undercuts the first point. How does one distinguish between nature and artifact when we rely on artifacts to produce or afford access to the natural phenomena, as with accelerators, electron microscopes, and space probes—all, one might add, mediated by software?5

We need not wait until the twentieth century to find technology intertwined with science. In insisting that ‘Nature to be commanded must be obeyed,’ Francis Bacon placed nature and art on the same physical and epistemological level.6 Artifacts work by the laws of nature, and by working reveal those laws. Only with the development of thermodynamics, which began with the analysis of steam engines already at work, did we ‘discover’ that the world is a heat engine subject to the laws of entropy. A century later came information theory, the analysis of communications systems arising from the problems of long-distance telephony, again already in place. Information could be related via the measure of entropy to thermodynamics, and the phenomenal world began to contain information as a measure of its order and structure. According to Stephen Hawking, quantum mechanics, relativity, thermodynamics, and information theory all meet on the event horizons of black holes. There's a lot of physics here, but also a lot of mathematics, and a lot of artifacts lying behind both.

Now, with the computer, nature has increasingly become a computation. DNA is code, the program for the process of development; the growth of plants follows the recursive patterns of formal languages, and biochemical molecules implement automata.7 Stephen Wolfram’s A New Kind of Science is only the most extreme assertion of an increasingly widespread view, expressed with equal fervor by proponents of Artificial Life. Although the computational world may have begun as a metaphor, it is now acquiring the status of metaphysics, thus repeating the early modern transition from the metaphor of ‘machine of the world’ to the metaphysics of matter in motion. The artifact as conceptual scheme is deeply, indeed inseparably, embedded in nature, and the relationship works both ways, as computer scientists turn to biological models to address problems of stability, adaptability, and complexity.8

Embedded too is the mathematics that has played a central role in the articulation of many of these models of nature—thermodynamical, informational, and computational—not simply by quantifying them but also, and more importantly, by capturing their structure and even filling it out. The calculus of central-force dynamics revealed by differentiation the Coriolis force before Coriolis identified it, and Gell-Mann's and Ne'eman's Ω baryon served as the tenth element needed to complete a group representation before it appeared with its predicted properties in the Brookhaven accelerator.9 One can point to other examples in between. Applied to the world as models, mathematical structures have captured its workings in ways thinkers since the 17th century have found uncanny or, as Eugene Wigner put it, ‘unreasonably effective’.10

Is mathematics a science of the natural world, or can it be? Computational science puts the question in a new light. Science is not about nature, but about how we represent nature to ourselves. We know about nature through the models we build of it, constructing them by abstraction from our experience, manipulating them physically or conceptually, and testing their implications back against nature. How we understand nature reflects, first, the mapping between the observable or measurable parameters of a physical system and the operative elements of the model and, second, our capacity to analyze the structure of the model and the transformations of which it is capable. In the physical sciences, the elements have been particles in motion or distributions of force in fields described in differential equations; in the life sciences, they have been organisms arranged in a taxonomy or gathered in statistically distributed populations. The scope and power of these models have depended heavily on the development of mathematical techniques to analyze them and to derive new structures and relations within them. Developed initially as a tool for solving systems of equations that were otherwise intractable, computers have evolved to provide a means for a new kind of modeling and thus a new kind of understanding of the world, namely dynamic simulation, which is what we generally mean when we talk of ‘computational science’. But with the new power come new problems, first, to define the mapping that relates the operative elements of the simulation to what we take to be those of the natural system and, second, to develop the mathematical tools to analyze the dynamic behavior of computational processes and to relate their structures to one another.

In short, understanding nature appears to be coming down to understanding computers and computation. Whether computer science, broadly conceived, should be or even could be a wholly mathematical science remains a matter of debate among practitioners, on which historians must be agnostic. What matters to this historian is that from the earliest days, leading members of the community of computer scientists, as measured by citations, awards, and honors, have turned to mathematics as the foundation of computer science. I am not stating a position but reporting a position taken by figures who would seem to qualify as authoritative. A look at what current computing curricula take to be fundamental theory confirms that position, and developments in computational science reinforce it further.

So, let me repeat my critics’ line of thinking: computation is what computers do, the computer is an artifact, and computation is a mathematical concept. So how could computation tell us anything about nature? Or rather, to take the historian’s view, how has it come about that computation is both a mathematical subject and an increasingly dominant model of nature? And how has that parallel development redefined the relations among technology, mathematics, and science?

Applied mathematics and its history

The answer is tied in part to a shift in the meaning of ‘applied mathematics’ that took place during the mid-20th century. It shifted from putting numbers on theory to modeling as abstraction of structure. The shift is related to Birkhoff’s differentiation between ‘classical’ and ‘modern’ algebra: the latter redirects attention from the objects being combined to the combinatory operations and their structural relations.11 Thus homomorphisms express the equivalence of structures arising from different operations, and isomorphism expresses the equivalence of structures differing only in the particular objects being combined. From functional analysis to category theory, algebra moved upward in a hierarchy of abstraction in which the relations among structures of one level became the objects of relations at the next. As Herbert Mehrtens has pointed out, mathematics itself was following in the direction Hilbert had indicated at the turn of the 20th century, beholden no longer to physical ontology or to philosophical grounding.12

The new kind of modeling followed that pattern and fitted well with concurrent shifts in the relation between mathematics and physics occasioned by the challenges of relativity and quantum mechanics, which pushed mathematical physics onto new ground, moving it from the mathematical expression of physical structures to the creation of mathematical structures open to, but not bound by, physical interpretation. A shift of technique accompanied the shift of ground. As Bruna Ingrao and Giorgio Israel observe in their history of equilibrium theory in economics,

... one went from the theory of individual functions to the study of the ‘collectivity’ of functions (or functional spaces); from the classical analysis based on differential equations to abstract functional analysis, whose techniques referred above all to algebra and to topology, a new branch of modern geometry. The mathematics of time, which had originated in the Newtonian revolution and developed in symbiosis with classical infinitesimal calculus, was defeated by a static and atemporal mathematics. A rough and approximate description of the ideal of the new mathematics would be ‘fewer differential equations and more inequalities’. In fact, the ‘new’ mathematics, of which von Neumann was one of the leading authors, was focused entirely upon techniques of functional analysis, measurement theory, convex analysis, topology, and the use of fixed-point theorems. One of von Neumann's greatest achievements was unquestionably that of having grasped the central role within modern mathematics of fixed-point theorems, which were to play such an important part in economic equilibrium theory.13

Ingrao and Israel echoed what Marcel P. Schützenberger (about whom more below) had earlier signaled as the essential achievement of John von Neumann’s theory of games, which he characterized as ‘the first conscious and coherent attempt at an axiomatic study of a psychological phenomenon’.

In von Neumann’s theory there is no reference to a physical substrate of which the equations will serve to symbolize the mechanism, nor are there introduced any but the minimum of psychological or sociological observations, which more or less fragmentary analytic schemes will seek to approximate. On the contrary, from the outset, the most general postulates possible, chosen certainly on the basis of extra-mathematical considerations that legitimate them a priori, but accessible in a direct way to mathematical treatment. These are mathematical hypotheses with psychological content—not mathematizable psychological data.14

Such an approach, Schützenberger added, required that von Neumann fashion new analytical tools from abstract algebra, including group theory and the theory of binary relations, thus making his application of mathematics to psychology at the same time ‘a new chapter in mathematics’. The physical world thus became an interpretation, or semantic model, of an essentially uninterpreted, abstract mathematical structure.

Von Neumann’s agendas: The formation of theoretical (mathematical) computer science

John von Neumann first turned to the computer as a tool for applied mathematics in the traditional sense. Numerical approximation had to suffice for what analysis could not provide when faced with non-linear partial differential equations encountered in hydrodynamics. But he recognized that the computer could be more than a high-speed calculator. It allowed a new form of scientific modeling, and it constituted an object of scientific inquiry in itself. That is, it was not only a means of doing applied mathematics of the traditional sort but a medium for applied mathematics of a new sort. Hence, his work on the computer took him in two directions, namely numerical analysis for high-speed computation (what became scientific computation) and the theory of automata as ‘artificial organisms’.15

In proposing new lines of research into automata and computation, von Neumann knew that it would require new mathematical tools, but he was not sure of where to look for those tools.

This is a discipline with many good sides, but also with certain serious weaknesses. This is not the occasion to enlarge upon the good sides, which I certainly have no intention to belittle. About the inadequacies, however, this may be said: Everybody who has worked in formal logic will confirm that it is one of the technically most refractory parts of mathematics. The reason for this is that it deals with rigid, all-or-none concepts, and has very little contact with the continuous concept of the real or of the complex number, that is, with mathematical analysis. Yet analysis is the technically most successful and best-elaborated part of mathematics. Thus formal logic is, by the nature of its approach, cut off from the best cultivated portions of mathematics, and forced onto the most difficult part of the mathematical terrain, into combinatorics.

The theory of automata, of the digital, all-or-none type, as discussed up to now, is certainly a chapter in formal logic. It will have to be, from the mathematical point of view, combinatory rather than analytical.16

At the time von Neumann wrote this, there were two fields of mathematics that dealt with the computer: mathematical logic and the Boolean algebra of circuits. The former treated computation at its most abstract and culminated with what could not be computed. The latter dealt with how to compute at the most basic level. Von Neumann was looking for something in the middle, and that is what emerged over the next fifteen years. It came, as von Neumann suspected, from combinatory mathematics rather than from analysis, although not entirely along the lines he expected. His own research focused on ‘growing’ automata (in the form of cellular automata) and the question of self-replication, a line of inquiry picked up by Arthur Burks and his Logic of Computers Group at the University of Michigan. This is an important subject still awaiting a historian.

The story of how the new subject assumed an effective mathematical form is both interesting and complex, and I have laid it out in varying detail in several articles.17 Let me here use a couple of charts to describe the main outline and to highlight certain relationships, among them what I call a convergence of agendas.

Agendas

At the heart of a discipline lies an agenda, a shared sense among its practitioners of what is to be done: the questions to be answered, the problems to be solved, the priorities among them, the difficulties they pose, and where the answers will lead. When one practitioner asks another, ‘What are you working on?’, it is their shared agenda that gives professional meaning to both the question and the response. Learning a discipline means learning its agenda and how to address it, and one acquires standing by solving problems of recognized importance and ultimately by adding fruitful questions and problems to the agenda. Indeed, the most significant solutions are precisely those that expand the agenda by posing new questions.18 Disciplines may cohere more or less tightly around a common agenda, with subdisciplines taking responsibility for portions of it. There may be some disagreement about problems and priorities. But there are limits to disagreement, beyond which it can lead to splitting, as in the separation of molecular biology from biochemistry and from biology.

Most important for present purposes, the agendas of different disciplines may intersect on what come to be recognized as common problems, viewed at first from different perspectives and addressed by different methods but gradually forming an autonomous agenda with its own body of knowledge and practices specific to it. Theoretical computer science presents an example of how such an intersection of agendas generated a new agenda and with it a new mathematical discipline.

Automata and formal languages

As the first chart suggests, the theory of automata and formal languages resulted from the convergence of a range of agendas on the common core of the correspondence between the four classes of Noam Chomsky's phrase-structure grammars and four classes of automata. To the first two classes correspond as well algebraic structures which capture their properties and behavior. The convergence occurred in several stages. First, the deterministic finite automaton took form as the common meeting ground of electrical engineers working on sequential circuits and of mathematical logicians concerned with the logical capabilities of nerve nets as originally proposed by Warren McCulloch and Walter Pitts and developed further by von Neumann.19 Indeed, Stephen Kleene's investigation of the McCulloch-Pitts paper was informed by his work at the same time on the page proofs for von Neumann's General and Logical Theory of Automata, which called his attention to the finite automaton. E.F. Moore's paper on sequential machines and Kleene's on regular events in nerve nets appeared together in Automata Studies in 1956, although both had been circulating independently for a time before that.20 Despite their common reference to finite automata, the two papers took no account of each other. They were brought together by Michael Rabin and Dana Scott in their seminal paper on ‘Finite Automata and Their Decision Problems’, which drew a correspondence between the states of a finite automaton and the equivalence classes of the sequences recognized by it.21
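The correspondence between states and equivalence classes of input sequences can be made concrete with a small sketch. The machine below (binary strings with an even number of 1s) and all names in it are hypothetical illustrations, not drawn from Rabin and Scott's paper; the sketch simply groups short strings by the state they lead to.

```python
from itertools import product

# Hypothetical example machine: accepts binary strings with an even number of 1s.
STATES = ("even", "odd")
START = "even"
FINAL = {"even"}
DELTA = {
    ("even", "0"): "even",
    ("even", "1"): "odd",
    ("odd", "0"): "odd",
    ("odd", "1"): "even",
}

def run(word, state=START):
    """Return the state reached after reading `word` starting from `state`."""
    for symbol in word:
        state = DELTA[(state, symbol)]
    return state

def accepts(word):
    """A word is recognized when it leads from the start state to a final state."""
    return run(word) in FINAL

def classes_by_state(max_len):
    """Group every word of length <= max_len by the state it leads to."""
    classes = {s: [] for s in STATES}
    for n in range(max_len + 1):
        for letters in product("01", repeat=n):
            word = "".join(letters)
            classes[run(word)].append(word)
    return classes
```

Two words that land in the same class remain in a common class whatever suffix is appended to both, which is what allows a state to stand in for the whole class of sequences that reach it.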

These initial investigations were couched in a combination of logical notation and directed graphs, which suited their essentially logical concerns. Kleene did suggest at the end of his article that ‘the study of a set of objects a , ..., a under a binary relation R, which is at the heart of the above proof [of his main theorem], might profitably draw on some algebraic theory.’22 Rabin and Scott in turn mentioned that the concatenated tapes of an automaton form a free semigroup with unit. But neither of the articles pursued the algebra any farther. The algebraic theory came rather from another quarter. In 1956 at the seminal Symposium on Information Theory held at MIT, Marcel P. Schützenberger contributed a paper, ‘On an Application of Semi-Group Methods to Some Problems in Coding’.23 There he showed that the structure of the semigroup captured the essentially sequential nature of codes in transmission, and the semigroup equivalent of the ‘word problem’ for groups expressed the central problem of recognizing distinct subsequences of symbols as they arrive. The last problem in turn is a version of the recognition problem for finite automata as defined and resolved by Kleene and then Rabin and Scott. Turning from codes to automata in a series of papers written between 1958 and 1962, Schützenberger established the monoid, or semigroup with unit, as the fundamental mathematical structure of automata theory, demonstrating that the states of a finite-state machine, viewed as a homomorphic image of the equivalence classes of strings indistinguishable by the machine, form a monoid and that the subset of final states is a closed homomorphic image of the strings recognized by the machine. Reflecting the agenda of Bourbaki's algebra, he then moved from monoids to semi-rings by expressing the sequences recognized by an automaton as formal power series in non-commutative variables.
On analogy with power series in real variables, he distinguished among ‘rational’ and ‘algebraic’ power series and explored various families of the latter.24 Initially he identified ‘rational’ power series with finite automata, and hence finite-state languages, but could point to no language or machine corresponding to the ‘algebraic’ series.
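The monoid attached to a finite-state machine can be computed directly. The following is a minimal sketch of the standard transition-monoid construction, not a reconstruction of Schützenberger's own presentation; the three-state machine (words over {a, b} containing the factor "ab") and the function names are assumptions chosen for illustration.

```python
# Hypothetical machine: states 0, 1, 2; state 2 is reached once "ab" has been read.
STATES = (0, 1, 2)
DELTA = {
    (0, "a"): 1, (0, "b"): 0,
    (1, "a"): 1, (1, "b"): 2,
    (2, "a"): 2, (2, "b"): 2,
}

def action(word):
    """The transformation of the state set induced by `word`, as a tuple."""
    result = []
    for state in STATES:
        for symbol in word:
            state = DELTA[(state, symbol)]
        result.append(state)
    return tuple(result)

def compose(f, g):
    """Apply f first, then g, so that action(u + v) == compose(action(u), action(v))."""
    return tuple(g[s] for s in f)

def transition_monoid(alphabet="ab"):
    """Close the generators' actions under composition, starting from the identity."""
    identity = action("")
    elements = {identity}
    frontier = [identity]
    generators = [action(letter) for letter in alphabet]
    while frontier:
        f = frontier.pop()
        for g in generators:
            h = compose(f, g)
            if h not in elements:
                elements.add(h)
                frontier.append(h)
    return elements
```

The map from words to transformations sends concatenation to composition and the empty word to the identity, so it is a homomorphism from the free monoid on the alphabet onto a finite monoid; for this machine the image has five elements.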

That would come through his collaboration with linguist Noam Chomsky, who at the same 1956 symposium introduced his new program for mathematical linguistics with a description of ‘Three models of language’ (finite-state, phrase-structure, and transformational).25 Chomsky made his scientific goal clear at the outset:

The grammar of a language can be viewed as a theory of the structure of this language. Any scientific theory is based on a certain finite set of observations and, by establishing general laws stated in terms of certain hypothetical constructs, it attempts to account for these observations, to show how they are interrelated, and to predict an indefinite number of new phenomena. A mathematical theory has the additional property that predictions follow rigorously from the body of theory.26

Following work with George Miller on finite-state languages viewed as Markov sequences, Chomsky turned in 1959 to those generated by more elaborate phrase-structure grammars, the context-free, context-sensitive, and recursively enumerable languages.27 The technique here stemmed from the production systems of Emil Post by way of Paul Rosenbloom’s treatment of concatenation algebras.28 Of these, the context-free languages attracted particular interest for both theoretical and practical reasons. Theoretically, Schützenberger determined in 1961 that they corresponded to his algebraic power series.29 Practically, Ginsburg and Rice showed that the syntax of portions of the newly created programming language Algol constituted a context-free grammar.30 They did so by transforming the Backus Normal Form31 description of those portions into set-theoretical equations among monotonically increasing functions forming a lattice and hence, by Alfred Tarski’s well known result, having a minimal fixed point, which constituted the language generated by the grammar.32 At the same time, Anthony Oettinger brought together a variety of studies on machine translation and syntactic analysis converging on the concept of the stack, or last-in-first-out list, also featured in the work of Klaus Samelson and Friedrich Bauer on sequential formula translation.33 Added to a finite automaton as a storage tape, it formed the pushdown automaton. In 1962, drawing on Schützenberger’s work on finite transducers, Chomsky showed that the context-free languages were precisely those recognized by a non-deterministic pushdown automaton.34 Their work culminated in a now-classic essay, ‘The algebraic theory of context-free languages’, and in Chomsky’s chapter on formal grammars in the Handbook of Mathematical Psychology.35
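The idea of a language as the minimal fixed point of a set equation can be sketched for a toy grammar. The grammar S → ε | a S b, the length bound, and the function names below are assumptions made for illustration and do not come from Ginsburg and Rice; the bound serves only to keep every set finite so that the iteration terminates.

```python
def step(language, max_len):
    """One application of the monotone map F(S) = {""} ∪ {"a" + w + "b" : w in S},
    truncated to words of length <= max_len."""
    grown = {""} | {"a" + w + "b" for w in language}
    return frozenset(w for w in grown if len(w) <= max_len)

def least_fixed_point(max_len):
    """Iterate F from the empty set until nothing changes."""
    language = frozenset()
    while True:
        nxt = step(language, max_len)
        if nxt == language:
            return language
        language = nxt
```

Starting from the empty set, each pass adds the next word of the form aⁿbⁿ, and the iteration stops once the bound is reached; `least_fixed_point(6)` yields the four words "", "ab", "aabb", and "aaabbb".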

By the mid-1960s, Schützenberger’s work had established the monoid and formal power series as the fundamental structures of the mathematical theory of automata and formal languages, laying the foundation for a rapidly expanding body of literature on the subject and, as he expected of the axiomatic method, for a new field of mathematics itself. As his student, Dominique Perrin, has noted, the subsequent development of the subject followed two divergent paths, marked on the one hand by works such as Samuel Eilenberg’s Automata, Languages, and Machines (‘la version la plus mathématisée des automates’) and on the other by the textbooks of A.V. Aho, John Hopcroft, and Jeffrey Ullman, in which the theory is adapted to the more practical concerns of practitioners of computing.36 In that they follow the ‘personal bias’ of Rabin, who opined in 1964 that

...whatever generalization of the finite automaton concept we may want to consider, it ought to be based on some intuitive notion of machines. This is probably the best guide to fruitful generalizations and problems, and our intuition about the behavior of machines will then be helpful in conjecturing and proving theorems.37

The subject would be fully mathematical, but it would retain its roots in the artifact that had given rise to it.

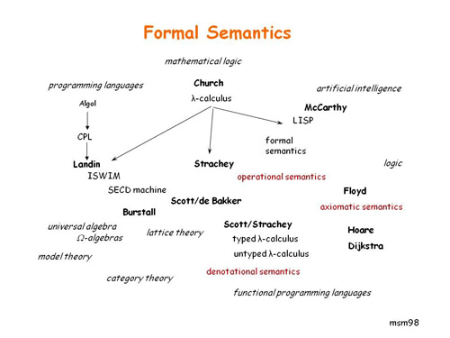

Formal semantics

From a mathematical point of view, formal semantics is the story of how the lambda calculus acquired a mathematical structure and of how universal algebra and category theory became useful in analyzing its untyped and typed forms, respectively. The story begins, by general acknowledgment, with the work of John McCarthy on the theoretical implications of LISP, as set forth in his 1960 article ‘Recursive Functions of Symbolic Expressions and their Computation by Machine’ and then in two papers, ‘A Basis for a Mathematical Theory of Computation’ and ‘Towards a Mathematical Science of Computation’.38 In the article, he outlined how the development of LISP had led to a machine-independent formalism for defining functions recursively and to a universal function that made the formalism equivalent to Turing machines. To express functions as objects in themselves, distinct both from their values and from forms that constitute them, McCarthy turned to Alonzo Church's λ-calculus, preferring its explicitly bound variables to Haskell Curry's combinatory logic, which avoided them only at the price of ‘lengthy, unreadable expressions for any interesting combinations.’39 However, Church's system had its drawbacks, too. ‘The λ-notation is inadequate for naming functions defined recursively,’ McCarthy noted. To remedy the problem, he introduced (on the suggestion of Nathaniel Rochester) a notation label to signify that the function named in the body of the λ-expression was the function denoted by the expression. It was a stop-gap measure, and McCarthy returned to the problem in his subsequent articles. It proved to be the central problem of the λ-calculus approach to semantics.
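The difficulty with naming recursive functions can be illustrated in a modern language. The sketch below does not reproduce McCarthy's label notation; it uses a different and now standard device, a fixed-point combinator in its strict-evaluation form (often called Z), to give an anonymous function access to itself. The names `Z`, `self`, and `factorial` are illustrative choices.

```python
# Z combinator: a fixed-point combinator that works under eager evaluation.
# The inner `lambda v: x(x)(v)` delays the self-application until it is needed.
Z = lambda f: (lambda x: f(lambda v: x(x)(v)))(lambda x: f(lambda v: x(x)(v)))

# The function body never refers to a global name for itself;
# the recursive reference `self` is supplied by Z.
factorial = Z(lambda self: lambda n: 1 if n == 0 else n * self(n - 1))
```

Here `factorial(5)` evaluates to 120, although no λ-expression in the definition mentions `factorial`. A naming construct such as label binds the name inside the body directly, whereas the combinator obtains the same effect within the pure notation.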

The two articles that followed built on the system of symbolic expressions, in which McCarthy now saw the foundations for a mathematical theory of computation. In ‘Basis’ he set out some of the goals of such a theory and thus an agenda for the field.

It should aim

- to develop a universal programming language

- to define a theory of the equivalence of computational processes

- to represent algorithms by symbolic expressions in such a way that significant changes in the behavior represented by the algorithms are represented by simple changes in the symbolic expressions

- to represent computers as well as computations in a formalism that permits a treatment of the relation between a computation and the computer that carries out the computation

- to give a quantitative theory of computation ... analogous to Shannon's measure of information.40

The article focused on two of these goals, setting out the formalism of symbolic expressions and introducing the method of recursion induction as a means of determining the equivalence of computational processes.

In the second paper, presented at IFIP 62, McCarthy defined his goals even more broadly, placing his mathematical science of computation in the historical mainstream:

In a mathematical science, it is possible to deduce from the basic assumptions, the important properties of the entities treated by the science. Thus, from Newton's law of gravitation and his laws of motion, one can deduce that the planetary orbits obey Kepler's laws.

The entities of computer science, he continued, are ‘problems, procedures, data spaces, programs representing procedures in particular programming languages, and computers.’ Especially problematic among them were data spaces, for which there was no theory akin to that of computable functions; programming languages, which lacked a formal semantics akin to formal syntax; and computers viewed as finite automata. ‘Computer science,’ he admonished, ‘must study the various ways elements of data spaces are represented in the memory of the computer and how procedures are represented by computer programs. From this point of view, most of the current work on automata theory is beside the point.’41

Whereas the earlier articles had focused on theory, McCarthy turned here to the practical benefits of a suitable mathematical science. It would be a new sort of mathematical theory, reaching beyond the integers to encompass all domains definable computationally and shifting from questions of unsolvability to those of constructible solutions. It should ultimately make it possible to prove that programs meet their specifications, as opposed simply (or not so simply) to debugging them… There was no question in McCarthy’s mind that computing was a mathematical subject. The problem was that he did not yet have the mathematics. In particular, insofar as his formalism for recursive definition rested on the λ-calculus, it lacked a mathematical model.42

McCarthy's new agenda for the theory of computation did not enlist much support at home, but it struck a resonant chord in Europe, where work was underway on the semantics of Algol 60 and PL/1. Christopher Strachey had learned about the λ-calculus from Roger Penrose in 1958 and had engaged Peter J. Landin to look into its application to formal semantics. In lectures at the University of London Computing Unit in 1963, Landin set out ‘A λ-calculus approach’ to programming, aimed at ‘introduc[ing] a technique of semantic analysis and ... provid[ing] a mathematical basis for it’.43 Here Landin followed McCarthy's lead but diverged from it in several respects, in particular by replacing McCarthy's LISP-based symbolic expressions with ‘applicative expressions’ (AEs) derived directly from the λ-calculus but couched in ‘syntactic sugar’ borrowed from the new Combined Programming Language (CPL) that Strachey and a team were designing for new machines at London and Cambridge.44 More important, where McCarthy had spoken simply of a ‘state vector’, Landin articulated it into a quadruple (S,E,C,D) of lists and list-structures consisting of a stack, an environment, a control, and a dump, and specified in terms of an applicative expression the action by which an initial configuration is transformed into a final one.

But Landin was still talking about a notional machine, which did not satisfy Strachey, who embraced the λ-calculus for reasons akin to those of Church in creating it. Where Church sought to eliminate free variables and the need they created for an auxiliary, informal semantics, Strachey wanted to specify the semantics of a programming language formally without the need for an informal evaluating mechanism. In ‘Towards a Formal Semantics’, he sought to do so, and to address the problem of assignment and jumps, by representing the store of a computer as a set of ‘R-values’ sequentially indexed by a set of ‘L-values’.45 The concept (suggested by Rod Burstall) reflected the two different ways the components of an assignment are evaluated by a compiler: in X := Y, the X is resolved into a location in memory into which the contents of the location designated by Y are stored. Strachey associated with each L-value α two operators, C(α) and U(α), respectively denoting the loading and updating of the ‘content of the store’ denoted by σ.46 C(α) applied to σ yields the R-value β held in location α, and U(α) applied to the pair (β′, σ) produces a new σ′ with β′ now located at α. One can now think of a command as an operator θ that changes the content of the store by updating it. Grafting these operations onto Landin’s λ-calculus approach to evaluating expressions, Strachey went on to argue that one could then reason about a program in terms of these expressions alone, without reference to an external mechanism.
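The loading and updating operators can be made concrete in a few lines. What follows is a minimal sketch, not Strachey's own notation: it assumes, purely for illustration, that L-values are strings, R-values are integers, and the store σ is a Python dictionary. The name `assign` is hypothetical.

```python
from typing import Callable, Dict

# Assumption for illustration: L-values are strings, R-values are integers.
Store = Dict[str, int]               # sigma: maps L-values to R-values
Command = Callable[[Store], Store]   # theta: an operator on stores


def C(alpha: str) -> Callable[[Store], int]:
    """Loading: C(alpha) applied to sigma yields the R-value held at alpha."""
    return lambda sigma: sigma[alpha]


def U(alpha: str) -> Callable[[int, Store], Store]:
    """Updating: U(alpha) applied to (beta_new, sigma) yields a new store."""
    def update(beta_new: int, sigma: Store) -> Store:
        sigma_new = dict(sigma)      # copy, so the old store is left intact
        sigma_new[alpha] = beta_new
        return sigma_new
    return update


def assign(x: str, y: str) -> Command:
    """The command X := Y, built only from C and U (hypothetical helper)."""
    return lambda sigma: U(x)(C(y)(sigma), sigma)


sigma0 = {"X": 1, "Y": 7}
sigma1 = assign("X", "Y")(sigma0)    # load from Y, update at X
```

Because `assign` returns a function from stores to stores, a program becomes a composition of such functions, which is the sense in which one can reason about it without appeal to an external evaluating mechanism.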

Strachey’s argument was a sketch, rather than a proof, and its structure involved a deep problem connected with the lack of a mathematical model for the λ-calculus, as Dana Scott insisted when he and Strachey met in Vienna in August 1969 and undertook collaborative research at Oxford in the fall. As Scott phrased it, taking S as a set of states σ, L as the set of locations (i.e. L-values) l, and V as the set of (R-)values, one can rewrite the loading operator in the form σ(l) ∈ V. A command ϒ is then a mapping of S into S. But ϒ is also located in the store and hence in V; that is ϒ = σ(l) for some l. Hence, the general form of a command may be written as ϒ(σ) = (σ(l))(σ), which lies ‘an insignificant step away from ... p(p)’. Scott continued:

To date, no mathematical theory of functions has ever been able to supply conveniently such a free-wheeling notion of function except at the cost of being inconsistent. The main mathematical novelty of the present study is the creation of a proper mathematical theory of functions which accomplishes these aims (consistently!) and which can be used as the basis for the metamathematical project of providing the ‘correct’ approach to semantics.47

The mathematical novelty, which took Scott by surprise, was finding that abstract data types and functions on them could be modeled as continuous lattices, which thereby constituted a model for the type-free λ-calculus; by application of Tarski’s theorem, the self-reference of recursive definitions, as represented by the Y-operator, resolved into the least fixed point of the lattice.
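The idea that a recursive definition denotes a least fixed point can be illustrated on a toy scale. The sketch below is not Scott's construction: it assumes a finite domain of partial functions (represented as dictionaries and ordered by extension) and reaches the fixed point by iterating upward from the empty function, a technique usually credited to Kleene. The names `F` and `least_fixed_point` are hypothetical.

```python
def F(f: dict, domain=range(6)) -> dict:
    """One unfolding of the recursive definition
    fact(n) = 1 if n == 0 else n * fact(n - 1),
    applied to a partial approximation f of fact."""
    g = {}
    for n in domain:
        if n == 0:
            g[n] = 1
        elif n - 1 in f:             # defined only where f already is
            g[n] = n * f[n - 1]
    return g


def least_fixed_point(step, bottom):
    """Iterate step from the bottom element until nothing changes."""
    current = bottom
    while True:
        nxt = step(current)
        if nxt == current:
            return current
        current = nxt


# Start from the everywhere-undefined function (the empty dictionary).
fact = least_fixed_point(F, {})
```

Each pass defines the function at one more argument, so the approximations form an increasing chain whose limit, here reached after finitely many steps, satisfies `F(fact) == fact`.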

By the mid-1960s proponents of formal semantics had articulated two goals. First, they sought a metalanguage in which the meaning of programming languages could be specified with the same precision with which their syntax could be defined. That is, a compiler should be provably able to translate the operational statements of a language into machine code as unambiguously as a parser could recognize their grammar. Second, the metalanguage should allow the mathematical analysis of programs to establish such properties as equivalence, complexity, reducibility, and so on. As Rod Burstall put it in 1968,

The aims of these semantic investigations are twofold. First they give a means of specifying programming languages more precisely, extending the syntactic precision which was first achieved in the Algol report to murkier regions which were only informally described in English by the Algol authors. Secondly they give us new tools for making statements about programs, particularly for proving that two dissimilar programs are in fact equivalent, a problem which tends to be obscured by the syntactic niceties of actual programming languages.48

Ultimately, the two goals came down to one. ‘[I]n the end,’ argued Dana Scott in the paper just mentioned,

the program still must be run on a machine—a machine which does not possess the benefit of ‘abstract’ human understanding, a machine that must operate with finite configurations. Therefore, a mathematical semantics, which will represent the first major segment of the complete, rigorous definition of a programming language, must lead naturally to an operational simulation of the abstract entities, which—if done properly—will establish the practicality of the language, and which is necessary for a full presentation.49

For the time being, mathematical discourse and working programs were different sorts of things, whatever the imperatives of theory or the long-term aspirations of artificial intelligence.

The difference is evident in McCarthy's two major papers. ‘Basis’ is about a mathematical theory of computation that enables one to talk about programs as mathematical objects, analyzing them and proving things about them without reference to any particular machine.50 ‘Towards’ concerns machines with state vectors and the changes wrought on them by storage, with or without side effects. Peter Landin’s applicative expressions in whatever notation similarly served mathematical purposes, while the SECD machine occupied the middle ground between proofs and working code. One could say a lot about the mathematics of programs without touching the problem of assignment, but one could not write compilers for Algol or CPL, and a fortiori one could not write a semantically correct compiler-compiler.

Hence, the work stimulated by McCarthy and Landin followed two lines of development during the late '60s. One line probed the power and limits of recursion induction and other forms of reasoning about programs. Investigations in this area struck links with other approaches to verifying programs. The second line concerned itself with the mathematical problem of instantiating abstract systems in the concrete architecture of the stored-program computer. The two lines converged on a common mathematical foundation brought out by the work of Scott and subsequently pursued in the denotational semantics of Scott and Strachey and in a new algebraic approach proposed by Burstall and Landin.

Computer science as pure and applied algebra

From the start, McCarthy had looked to formal semantics not only to give definiteness to the specification of languages but also, and perhaps more importantly, to provide the basis for proving equivalences among programs and for demonstrating that a program will work as specified. While subsequent work considerably furthered the first goal and revealed the complexity of the programming languages being designed and implemented, it continued to speak a creole of formal logic and Algol. In the later 1960s, Burstall and Landin began to consider how the enterprise might be couched more directly in mathematical terms. As they wrote in a joint article published in 1969,

Programming is essentially about certain 'data structures' and functions between them. Algebra is essentially about certain 'algebraic structures' and functions between them. Starting with such familiar algebraic structures as groups and rings algebraists have developed a wider notion of algebraic structure (or 'algebra') which includes these as examples and also includes many of the entities which in the computer context are thought of as data structures.51

Working largely from Paul M. Cohn's Universal Algebra (1965), they expressed functions on data structures as homomorphisms of Ω-algebras, linking them via semigroups to automata theory and thereby extending to operations of higher arity on data structures the conclusion reached by J. Richard Büchi a few years earlier:

If the definition of ‘finite automaton’ is appropriately chosen, it turns out that all the basic concepts and results concerning structure and behavior of finite automata are in fact just special cases of the fundamental concepts (homomorphism, congruence relation, free algebra) and facts of abstract algebra. Automata theory is simply the theory of universal algebras (in the sense of Birkhoff) with unary operations, and with emphasis on finite algebras.52

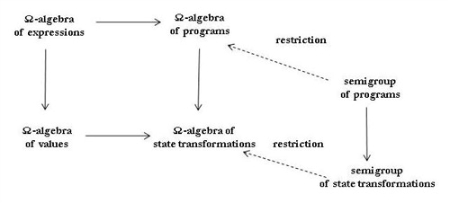

By way of examples, Burstall and Landin set out a formulation of list-processing in terms of homomorphisms and a proof of the correctness of a simpler compiler similar to the one treated by McCarthy and Painter by means of recursion induction. Their approach can perhaps be summed up by one of their diagrams.53
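A familiar instance of a function on data structures behaving as a homomorphism is list length, which carries concatenation to addition and the empty list to zero. The sketch below is only an illustration of that idea, not Burstall and Landin's formulation: the helper `is_monoid_homomorphism` is hypothetical, and it checks the homomorphism laws on a finite set of samples rather than proving them.

```python
from itertools import product


def is_monoid_homomorphism(h, samples, op_src, unit_src, op_dst, unit_dst):
    """Check h(unit) == unit' and h(a * b) == h(a) *' h(b) on the samples.
    A finite check can refute the laws but cannot prove them."""
    if h(unit_src) != unit_dst:
        return False
    return all(
        h(op_src(a, b)) == op_dst(h(a), h(b))
        for a, b in product(samples, repeat=2)
    )


samples = [[], [1], [2, 3], [4, 5, 6]]


def concat(a, b):
    return a + b                     # operation of the source algebra (lists)


def add(m, n):
    return m + n                     # operation of the target algebra (integers)


def first_or_zero(xs):
    return xs[0] if xs else 0        # a map that ignores the list structure


length_ok = is_monoid_homomorphism(len, samples, concat, [], add, 0)
first_ok = is_monoid_homomorphism(first_or_zero, samples, concat, [], add, 0)
```

Here `length_ok` is `True` and `first_ok` is `False`: taking the first element does not respect concatenation, so it is not structure-preserving in the algebraic sense.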

Category theory did not lie far beyond Ω-algebras and over the course of the 1970s became the language for talking about the typed λ-calculus, as functional programming in turn became in Burstall’s terms a form of ‘electronic category theory’.54

As automata theory, formal languages, and formal semantics took mathematical form at the turn of the 1970s, the applications of abstract algebra to computers and computation began to feed back into mathematics as new fields in themselves. In 1973 Cohn presented a lecture on ‘Algebra and Language Theory’, which later appeared as Chapter XI of the second edition of his Universal Algebra. There, in presenting the main results of Schützenberger’s and Chomsky’s algebraic theory, he spoke of the ‘vigorous interaction [of mathematical models of languages] with some parts of noncommutative algebra, with benefit to ‘mathematical linguist’ and algebraist alike.’55 A few years earlier, as Birkhoff was publishing his new textbook with Bartee in 1969, he also wrote an article reviewing the role of algebra in the development of computer science. There he spoke of what he had learned as a mathematician from the problems of minimization and optimization arising from the analysis and design of circuits and from similar problems posed by the optimization of error-correcting binary codes. Together,

[these] two unsolved problems in binary algebra ... illustrate the fact that genuine applications can suggest simple and natural but extremely difficult problems, which are overlooked by pure theorists. Thus, while working for 30 years (1935–1965) on generalizing Boolean algebra to lattice theory, I regarded finite Boolean algebras as trivial because they could all be described up to isomorphism, and completely ignored the basic ‘shortest form’ and ‘optimal packing’ problems described above.56

Earlier in the article, Birkhoff had pointed to other ways in which ‘the problems of computing are influencing algebra’. To make the point, he compared the current situation with the Greek agenda of ‘rationalizing geometry through constructions with ruler and compass (as analog computers)’.

By considering such constructions and their optimization in depth, they were led to the existence of irrational numbers, and to the problems of constructing regular polygons, trisecting angles, duplicating cubes, and squaring circles. These problems, though of minor technological significance, profoundly influenced the development of number theory.

I think that our understanding of the potentialities and limitations of algebraic symbol manipulation will be similarly deepened by attempts to solve problems of optimization and computational complexity arising from digital computing.

Birkhoff's judgment, rendered at just about the time that theoretical computer science was assigned its own main heading in Mathematical Reviews, points to just one way in which computer science was opening up a previously unknown realm of mathematics lying between the finite and the infinite, namely the finite but intractably large. Computational complexity was another.

In 1974, acting in Scott’s words as ‘the Euclid of automata theory’, Samuel Eilenberg undertook to place automata and formal languages on a common mathematical foundation underlying the specific interests that had motivated them. ‘It appeared to me,’ he wrote in the preface of his intended four-volume Automata, Languages, and Machines,

that the time is ripe to try and give the subject a coherent mathematical presentation that will bring out its intrinsic aesthetic qualities and bring to the surface many deep results which merit becoming part of mathematics, regardless of any external motivation.57

Yet, in becoming part of mathematics the results would retain the mark characteristic of their origins. All of Eilenberg's proofs were constructive in the sense of constituting algorithms.

A statement asserting that something exists is of no interest unless it is accompanied by an algorithm (i.e., an explicit or effective procedure) for producing this ‘something’.

In addition, Eilenberg held back from the full generality to which abstract mathematicians usually aspired. Aiming at a ‘restructuring of the material along lines normally practiced in algebra’, he sought to reinforce the original motivations rather than to eradicate them, arguing that both mathematics and computer science would benefit from his approach.

To the pure mathematician, I tried to reveal a body of new algebra, which, despite its external motivation (or perhaps because of it) contains methods and results that are deep and elegant. I believe that eventually some of them will be regarded as a standard part of algebra. To the computer scientist I tried to show a correct conceptual setting for many of the facts known to him (and some new ones). This should help him to obtain a better and sharper mathematical perspective on the theoretical aspects of his researches.

Coming from a member of Bourbaki, who insisted on the purity of mathematics, Eilenberg's statement is all the more striking in its recognition of the applied origins of ‘deep and elegant’ mathematical results.

In short, in the formation of theoretical computer science as a mathematical discipline the traffic traveled both ways. Mathematics provided the structures on which a useful and deep theory of computation could be erected. In turn, theoretical computer science gave ‘physical’ meaning to some of the most abstract, ‘useless’ concepts of modern mathematics: semigroups, lattices, Ω-algebras, categories. In doing so, it motivated their further analysis as mathematical entities, bringing out unexpected properties and relationships among them. It is important for the historian to record this interaction before those entities are ‘naturalized’ into the axiomatic presentation of the field, as if they had always been there or had appeared out of thin air.

The structures of computation and the structures of the world

As noted earlier, in seeking a theory for the new electronic digital computer, John von Neumann took his cue from biology and neurophysiology. He thought of automata as artificial organisms and asked how they might be designed to behave like natural organisms. His program split into two lines of research: finite automata and growing automata as represented by cellular automata. By the late 1960s, the first field had expanded into a mathematically elegant theory of automata and formal languages resting firmly on algebraic foundations. At this point, the direction of inspiration reversed itself. In the late 1960s, Aristid Lindenmayer turned to the theory of formal languages for models of cellular development.58 Although he couched his analysis in terms of sequential machines, it was soon reformulated in terms of formal languages, in particular context-free grammars. His L-systems, which soon became a field of study themselves, were among the first applications of theoretical computer science and its tools to the biological sciences, a relationship reinforced by the resurgence in the 1980s of cellular automata as models of complex adaptive systems. During the 1990s theoretical chemist Walter Fontana used the λ-calculus to model the self-organization and evolution of biological molecules. Most recently the π-calculus, initially designed by Robin Milner by analogy to the λ-calculus for defining the syntax and operational semantics of concurrent processes, has become the basis for analyzing the dynamics of the living cell.59 As the introduction to a recent volume describes the relationship,

The challenge of the 21st century will be to understand how these [macromolecular] components [of cellular systems] integrate into complex systems and the function and evolution of these systems, thus scaling up from molecular biology to systems biology. By combining experimental data with advanced formal theories from computer science, ‘the formal language for biological systems’ to specify dynamic models of interacting molecular entities would be essential for:

(i) understanding the normal behaviour of cellular processes, and how changes may affect the processes and cause disease ...;

(ii) providing predictability and flexibility to academic, pharmaceutical, biotechnology and medical researchers studying gene or protein functions.60

Here the artifact as formal (mathematical) system has become deeply embedded in the natural world, and it is not clear how one would go about re-establishing traditional epistemological boundaries among the elements of our understanding.

Mathematics and the world

Over the past three decades, computational models have become an essential tool of scientific inquiry. They provide our only access, both empirically and intellectually, to the behavior of the complex non-linear systems that constitute the natural world, especially the living world. As Paul Humphreys notes concerning the massive quantities of data that only a computer can handle,

Technological enhancements of our native cognitive abilities are required to process this information, and have become a routine part of scientific life. In all of the cases mentioned thus far, the common feature has been a significant shift of emphasis in the scientific enterprise away from humans because of enhancements without which modern science would be impossible. ... [I]n extending ourselves, scientific epistemology is no longer human epistemology.61

In other words, our understanding of nature has come to depend on our understanding of the artifact that implements those models, an artifact that we consider to be essentially mathematical in nature: it is the computation that counts, not the computer that carries it out. Thus, our epistemology retains a human aspect to the extent that mathematics affords us conceptual access to the computational processes that enact our representations of the world. And that remains an open problem.

In 1962, looking ahead toward a mathematical theory of computation and back to the standard of a mathematical science set by Newton, John McCarthy sought to place computing in the scientific mainstream:

It is reasonable to hope that the relationship between computation and mathematical logic will be as fruitful in the next century as that between analysis and physics in the last. The development of this relationship demands a concern for both applications and for mathematical elegance.62

The relationship between analysis and physics in the 19th century had borne more than theoretical fruit. It had been the basis of new technologies that transformed society and indeed nature. Implicit in McCarthy’s exhortation, then, was a mathematical science of computation that worked in the world in the way that mathematical physics had done. McCarthy had in mind, of course, artificial intelligence and its practical applications.

During the 1970s the new sciences of complexity changed mathematical physics. In 1984 Stephen Wolfram similarly looked to past models for a mathematical science of a decidedly non-Newtonian world. In laying out the computational theory of cellular automata, he noted that:

Computation and formal language theory may in general be expected to play a role in the theory of non-equilibrium and self-organizing systems analogous to the role of information theory in conventional statistical mechanics.63

Table one, derived from his article, reflects what he had in mind.

Cellular Automata and Formal Languages

| Class | Qualitative characterizations of complexity | Patterns generated by evolution from initial configuration | Effect of small changes in initial configurations | ‘Information’ of initial state propagates | Formal language representing limit set |
|---|---|---|---|---|---|
| 1 | Tends toward a spatially homogeneous state | Pattern disappears with time | No change in final state | Finite distance | Regular |
| 2 | Yields a sequence of simple stable or periodic structures | Pattern evolves to a fixed finite size | Changes only in a region of finite size | Finite distance | Regular |
| 3 | Exhibits chaotic aperiodic behavior | Pattern grows indefinitely at a fixed rate | Changes over a region of ever-increasing size | Infinite distance at fixed positive speed | Context sensitive? |
| 4 | Yields complicated localized structures, some propagating | Pattern grows and contracts with time | Irregular changes | Infinite distance | Universal |

Stephen Wolfram, ‘Computation Theory of Cellular Automata’, Communications in Mathematical Physics 96 (1984)
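The column on the effect of small changes can be explored with a very small experiment. The sketch below is an illustration under stated assumptions, not a reconstruction of Wolfram's analysis: it uses one-dimensional, two-state, nearest-neighbour automata on a ring of 64 cells, takes rule 0 as a stand-in for class 1 and rule 90 as a rule commonly cited as class 3, and measures how wide the region of disagreement becomes after one cell of a random initial configuration is flipped. The function names are hypothetical.

```python
import random


def step(cells, rule):
    """Apply one update of an elementary cellular automaton on a ring.
    The new state of a cell is the bit of `rule` indexed by 4*left + 2*centre + right."""
    n = len(cells)
    return [
        (rule >> (4 * cells[(i - 1) % n] + 2 * cells[i] + cells[(i + 1) % n])) & 1
        for i in range(n)
    ]


def evolve(cells, rule, steps):
    for _ in range(steps):
        cells = step(cells, rule)
    return cells


def changed_region(rule, width=64, steps=10, seed=0):
    """Flip one cell of a random configuration and return the width of the
    region in which the two evolutions differ after `steps` updates."""
    rng = random.Random(seed)
    a = [rng.randint(0, 1) for _ in range(width)]
    b = list(a)
    b[width // 2] ^= 1               # the 'small change' in the initial state
    a, b = evolve(a, rule, steps), evolve(b, rule, steps)
    diff = [i for i in range(width) if a[i] != b[i]]
    return (max(diff) - min(diff) + 1) if diff else 0


class1_extent = changed_region(rule=0)    # 0: the perturbation vanishes
class3_extent = changed_region(rule=90)   # 21: it has spread 10 cells each way
```

Rule 90 replaces each cell by the exclusive-or of its two neighbours, so the difference between the two runs itself evolves like a single seed and its extent grows by one cell per step on each side, matching the ‘ever-increasing size’ entry for class 3.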

But with the power of computational modeling came a new challenge to mathematics. Traditional models enhance understanding by mapping the operative parameters of a phenomenon into the elements of an analyzable mathematical structure, e.g. the mass, position, and momentum of a planet related by a differential equation. Computational models, in particular computer simulations, render both the mapping and the dynamics of the structure opaque to analysis. While the program may help to discern the relation between the structure of the model and that of the phenomenon, the process determined by that program remains all but impenetrable to our understanding. As Christopher Langton observed,

We need to separate the notion of a formal specification of a machine—that is, a specification of the logical structure of the machine—from the notion of a formal specification of a machine's behavior—that is, a specification of the sequence of transitions that the machine will undergo. In general, we cannot derive behaviours from structure, nor can we derive structure from behaviours.64

In the concluding chapter of Hidden Order: How Adaptation Builds Complexity, Holland makes clear what is lost thereby. Looking ‘Toward Theory’ and ‘the general principles that will deepen our understanding of all complex adaptive systems [cas]’, he insists as a point of departure that:

Mathematics is our sine qua non on this part of the journey. Fortunately, we need not delve into the details to describe the form of the mathematics and what it can contribute; the details will probably change anyhow, as we close in on our destination. Mathematics has a critical role because it alone enables us to formulate rigorous generalizations, or principles. Neither physical experiments nor computer-based experiments, on their own, can provide such generalizations. Physical experiments usually are limited to supplying input and constraints for rigorous models, because the experiments themselves are rarely described in a language that permits deductive exploration. Computer-based experiments have rigorous descriptions, but they deal only in specifics. A well-designed mathematical model, on the other hand, generalizes the particulars revealed by physical experiments, computer-based models, and interdisciplinary comparisons. Furthermore, the tools of mathematics provide rigorous derivations and predictions applicable to all cas. Only mathematics can take us the full distance.65

In the absence of mathematical structures that allow abstraction and generalization, computational models do not say much. Nor do they function as models traditionally have done in providing an understanding of nature on the basis of which we can test our knowledge by making things happen in the world.

In pointing to the need for a mathematics of complex adaptive systems, Holland was expressing a need as yet unmet. The mathematics in question ‘[will have to] depart from traditional approaches to emphasize persistent features of the far-from-equilibrium evolutionary trajectories generated by recombination.’66 His sketch of the specific form the mathematics might take suggests that it will depart from traditional approaches along branches rather than across chasms, and that it will be algebraic. But it has not yet been created. If and when it does emerge, it is likely to confirm Schützenberger’s principle that ‘it is again a characteristic of the axiomatic method that it is at the same time always a new chapter in mathematics.’67

References

1 Birkhoff modestly omits mention here of his textbook, A Survey of Modern Algebra, written jointly with Saunders MacLane, which played a major role in that development. How and why the shift came about is, of course, another question of considerable historical interest; see Leo Corry, Modern Algebra and the Rise of Mathematical Structures (Basel; Boston; Berlin: Birkhäuser, 1996, 2nd. ed. 2004).

2 Garrett Birkhoff and Thomas C. Bartee, Modern Applied Algebra (New York: McGraw-Hill Book Company, 1970), Preface, v. A preliminary edition appeared in 1967.

3 On the computer as a ‘defining technology’, see J. David Bolter, Turing's Man (Chapel Hill: UNC Press, 1984).

4 ‘Software as Science—Science as Software’, in Ulf Hashagen, Reinhard Keil-Slawik, and Arthur Norberg (eds.) History of Computing: Software Issues (Berlin: Springer Verlag, 2002), 25-48.

5 See, in addition to the usual suspects, the illuminating argument for a material epistemology of science by Davis Baird, Thing Knowledge (Berkeley: U. California Pr., 2004). Paul Humphreys offers a counterpart for computational science in Extending Ourselves: Computational Science, Empiricism, and Scientific Method (Oxford: Oxford U.P., 2004).

6 Francis Bacon, The New Organon [London, 1620], trans. James Spedding, Robert L. Ellis, and Douglas D. Heath in The Works, vol. VIII (Boston: Taggart and Thompson, 1863; repr. Indianapolis: Bobbs-Merrill, 1960), Aph. 3.

7 On the last, see Ehud Shapiro’s contribution to the conference, ‘What is a Computer? The World is Not Enough to Answer’, describing computers made of biological molecules.

8 In recent articles and interviews arguing for placing computer science on a par with the natural sciences, Peter Denning points to recent ‘discoveries’ that nature is based on information processes.

9 Murray Gell-Mann and Yuval Ne'eman, The Eightfold Way (NY; Amsterdam: W.A. Benjamin, 1964).

10 Eugene P. Wigner, ‘The Unreasonable Effectiveness of Mathematics in the Natural Sciences’, Communications on Pure and Applied Mathematics 13, 1 (1960). Cf. Mark Steiner, The Applicability of Mathematics as a Philosophical Problem (Cambridge, MA: Harvard UP, 1998).

11 On the seventeenth-century origins of the structural notion of algebra, see Michael S. Mahoney, ‘The Beginnings of Algebraic Thought in the Seventeenth Century’, in S. Gaukroger (ed.), Descartes: Philosophy, Mathematics and Physics (Sussex: The Harvester Press/Totowa, NJ: Barnes and Noble Books, 1980), Chap. 5. For subsequent developments, see the book by Corry cited above.

12 Herbert Mehrtens, Moderne Sprache Mathematik: eine Geschichte des Streits um die Grundlagen der Disziplin und des Subjekts formaler Systeme (Frankfurt am Main: Suhrkamp, 1990). For a more nuanced analysis of Hilbert's thinking on the relation of mathematics to the physical world, see Leo Corry, David Hilbert and the Axiomatization of Physics (1898–1918): From Grundlagen der Geometrie to Grundlagen der Physik (Dordrecht/Boston/London: Kluwer Academic Publishers, 2004).

13 Bruna Ingrao and Giorgio Israel, The Invisible Hand: Economic Equilibrium in the History of Science (trans. Ian McGilvray, Cambridge, MA: MIT Press, 1990), 186-87.

14 M. P. Schützenberger, ‘A propos de la “cybernétique”’, Evolution psychiatrique 20,4(1949), 595, 598.

15 For an overview of von Neumann's work in the first area, see William Aspray, John von Neumann and the Origins of Modern Computing (Cambridge, MA: MIT Press, 1990), Chapter 5, ‘The Transformation of Numerical Analysis’.

16 John von Neumann, ‘The General and Logical Theory of Automata’, Cerebral Mechanisms in Behavior (Hixon Symposium Hafner Publishing, 1951), 1-41.

17 See in particular ‘Computer Science: The Search for a Mathematical Theory’, in John Krige and Dominique Pestre (eds.), Science in the 20th Century (Amsterdam: Harwood Academic Publishers, 1997), Chap. 31, and ‘What Was the Question? The Origins of the Theory of Computation’, in Using History to Teach Computer Science and Related Disciplines (Selected Papers from a Workshop Sponsored by Computing Research Association with Funding from the National Science Foundation), ed. Atsushi Akera and William Aspray (Washington, DC: Computing Research Association, 2004), 225-232.

18 For all the public fervor surrounding Andrew Wiles's proof of Fermat's ‘last theorem’, it was the new areas of investigation suggested by his solution of the Taniyama conjecture that excited his fellow practitioners.

19 Huffman, Moore, McCulloch, Pitts, JvN

20 John McCarthy and Claude E. Shannon, Automata Studies (Princeton: Princeton University Press, 1956).

21 Michael Rabin and Dana Scott, ‘Finite Automata and Their Decision Problems’, IBM Journal of Research and Development 3, 2 (1959), 114.

22 Stephen C. Kleene, ‘Representation of Events in Nerve Nets and Finite Automata’, in Automata Studies, ed. C.E. Shannon and J. McCarthy (Princeton, 1956), 37.

23 George A. Miller dates the beginnings of cognitive science from this symposium, which included a presentation by Allen Newell and Herbert Simon of their ‘Logic Theory Machine,’ Noam Chomsky's ‘Three Models for the Description of Language,’ and Miller's own ‘Human Memory and the Storage of Information’. Ironically, for Chomsky and Miller, the symposium marked a turn away from information theory as the model for their work.

24 M.P. Schützenberger, ‘Un problème de la théorie des automates,’ Séminaire Dubreil-Pisot 13(1959/60), no 3 (23 November 1959); ‘On the definition of a family of automata,’ Information and Control 4(1961), 245-70; ‘Certain elementary families of automata,’ in Mathematical Theory of Automata, ed. Jerome Fox (Brooklyn, NY, 1963), 139-153.

25 In 1955 Chomsky began circulating the manuscript of his Logical Structure of Linguistic Theory, but MIT's Technology Press hesitated to publish such a radically new approach to the field before Chomsky had exposed it to critical examination through articles in professional journals. A pared-down version of the work appeared as Syntactic Structures in 1957, and a partially revised version of the whole, with a retrospective introduction, in 1975 (New York: Plenum Press).

26 Noam Chomsky, ‘Three models for the description of language’, IRE Transactions on Information Theory 2,3(1956), 113-24; at 113.

27 Noam Chomsky, ‘On certain formal properties of grammars’, Information and Control 2,2(1959), 137-167.

28 Paul C. Rosenbloom, The Elements of Mathematical Logic (New York: Dover Publications, 1950; repr. 2005). In a review in the Journal of Symbolic Logic 18(1953), 277-280, Martin Davis drew particular attention to Rosenbloom’s inclusion of combinatory logics and Post canonical systems, joining the author in hoping the book would make Post’s work more widely known. To judge from citations, Rosenbloom’s text was an important resource for many of the people discussed here. For example, it appears that Peter Landin and Dana Scott first learned of Church’s λ-calculus through it.

29 M. P. Schützenberger, ‘Some remarks on Chomsky’s context-free languages’, Quarterly Progress Report, (MIT) Research Laboratory for Electronics 63(10/15/61), 155-170.

30 Seymour Ginsburg and H. Gordon Rice, ‘Two families of languages related to ALGOL’, Journal of the ACM 9(1962), 350-371.

31 J.W. Backus, ‘The syntax and semantics of the proposed international algebraic language of the Zurich ACM-GAMM Conference’, Proceedings of the International Conference on Information Processing (Paris: UNESCO, 1959), 125-132. Backus later pointed to Post’s production systems as the model for what he referred to here as metalinguistic formulae.

32 Alfred Tarski, ‘A lattice-theoretical fixpoint theorem and its applications’, Pacific Journal of Mathematics 5(1955), 285-309.

33 Samelson and Bauer, ‘Sequentielle Formelübersetzung’, Elektronische Rechenanlagen 1(1959), 176-82; ‘Sequential Formula Translation’, Comm. ACM 3,2(1960), 76-83.

34 Noam Chomsky, ‘Context-free grammars and pushdown storage’, Quarterly Progress Report, (MIT) Research Laboratory for Electronics 65(4/15/62), 187-194.

35 Noam Chomsky and M.P. Schützenberger, ‘The algebraic theory of context-free languages’, in Computer Programming and Formal Systems, ed. P. Braffort and D. Hirschberg (Amsterdam, 1963). Originally prepared for an IBM Seminar in Blaricum, Netherlands, in the summer of '61, the published version took account of intervening results, in particular the introduction of the pushdown store.

36 Dominique Perrin, ‘Les débuts de la théorie des automates’, Technique et science informatique 14(1995), 409-43.

37 Michael O. Rabin, ‘Lectures on Classical and Probabilistic Automata’, in E.R. Caianiello (ed.), Automata Theory (New York: Academic Press, 1966), 306.

38 John McCarthy, ‘Recursive Functions of Symbolic Expressions and their Computation by Machine, Part 1’, Communications of the ACM 3,4(1960), 184-195; ‘A Basis for a Mathematical Theory of Computation’, Proceedings of the Western Joint Computer Conference (New York: NJCC, 1961), 225-238; republished with an addendum ‘On the Relations between Computation and Mathematical Logic’ in Computer Programming and Formal Systems, ed. P. Braffort and D. Hirschberg (Amsterdam: North-Holland, 1963), 33-70; ‘Towards a Mathematical Science of Computation’, Proceedings IFIP Congress 62, ed. C.M. Popplewell (Amsterdam: North-Holland, 1963), 21-28.

39 McCarthy cited Church's Calculi of Lambda Conversion (Princeton, 1941) but neither named Curry nor gave a source for his combinatory logic.

40 McCarthy, ‘Basis’ (1961), 225.

41 McCarthy, ‘Towards’, 21.

42 As Anil Nerode pointed out in Mathematical Reviews 26(1963), #5766.

43 P.J. Landin, ‘A λ-calculus approach’, in L. Fox (ed.) Advances in Programming and Non-Numerical Computing (Oxford: Pergamon Press, 1966), Chap. 5, p.97. The main body of the lectures had already appeared as ‘The mechanical evaluation of expressions’, Computer Journal 6(1964), 308-320.

44 In ‘The Next 700 Programming Languages’, Communications of the ACM 9,3(1966), 157-166, Landin expanded the notation into a ‘family of languages’ called ISWIM (If you See What I Mean), which he noted could be called ‘Church without lambda’. On CPL, see D.W. Barron, et al., ‘The main features of CPL’, Computer Journal 6(1964), 134-142.

45 Christopher Strachey, ‘Towards a Formal Semantics’, Formal Language Description Languages for Computer Programming, ed. T.B. Steel, Jr. (Amsterdam: North-Holland, 1966), 198-216.

46 That is, at each step of a computation, σ is the set of pairs (α, β) relating L-values to R-values, or the current map of the store.

47 Dana Scott, ‘Outline of a mathematical theory of computation’, Technical Monograph PRG-2, Oxford University Computing Laboratory, November 1970, 4; an earlier version appeared in the Proceedings of the Fourth Annual Princeton Conference on Information Sciences and Systems, 1970, 169-176.

48 ‘Semantics of assignment’, Machine Intelligence 2(1968), 3-20; at 3.

49 ‘Outline of a mathematical theory of computation’, Princeton version, 169.

50 In ‘Recursive Functions’, McCarthy used an abstract LISP machine, leaving the question of its implementation to a concluding and independent section.

51 R.M. Burstall and P.J. Landin, ‘Programs and their proofs, an algebraic approach’, Machine Intelligence 4(1969), 17.

52 Cf. J. Richard Büchi, ‘Algebraic Theory of Feedback in Discrete Systems, Part I,’ in Automata Theory, ed. E.R. Caianiello (New York, 1966), 71. The reference is to Birkhoff’s Lattice Theory (New York, 1948).

53 Burstall and Landin, 32 (redrawn).

54 Rod Burstall, ‘Electronic Category Theory’, Mathematical Foundations of Computer Science 1980 [Proceedings of the Ninth International Symposium - Springer LNCS 88], (Berlin: Springer Verlag, 1980), 22-39.

55 Paul M. Cohn, Universal Algebra, 2nd ed. (Dordrecht: Reidel, 1981), 345. The chapter was reprinted from the Bulletin of the London Mathematical Society 7(1975), 1-29.

56 Garrett Birkhoff, ‘The Role of Modern Algebra in Computing’, Computers in Algebra and Number Theory (American Mathematical Society, 1971), 1-47, repr. in his Selected Papers on Algebra and Topology (Boston: Birkhäuser, 1987), 513-559; at 517; emphasis in the original.

57 Automata, Languages, and Machines (2 vols., NY: Columbia University Press, 1974), Vol. A, xiii.

58 Aristid Lindenmayer, ‘Mathematical Models for Cellular Interactions in Development’, Journal of Theoretical Biology 18(1968). For subsequent development of the field, see Lindenmayer and Grzegorz Rozenberg (eds.), Automata, Languages, and Development (Amsterdam: North-Holland, 1976) and Rozenberg and Arto Salomaa, The Book of L (Berlin: Springer-Verlag, 1986).

59 On the λ-calculus, see e.g. W. Fontana and L.W. Buss, ‘The Barrier of Objects: From Dynamical Systems to Bounded Organizations’, in Boundaries and Barriers, ed. J. Casti and A. Karlqvist (New York: Addison-Wesley, 1996), 56–116; on the π-calculus, see A. Regev, W. Silverman, and E. Shapiro, ‘Representation and simulation of biochemical processes using the π-calculus process algebra’, in Pacific Symposium on Biocomputing 6(2001), 459-470, and Regev and Shapiro, ‘Cellular abstractions: Cells as computation’, Nature 419(2002), 343.

60 Corrado Priami, ‘Preface’ to Computational Methods in Systems Biology, Proceedings of the First International Workshop, Rovereto, Italy, February 24-26, 2003, LNCS 2602 (Berlin: Springer, 2003).

61 Paul Humphreys, Extending Ourselves: Computational Science, Empiricism, and Scientific Method (Oxford: Oxford U.P., 2004), 8.

62 McCarthy, ‘Basis’, 69.

63 Stephen Wolfram, ‘Computation Theory of Cellular Automata’, Communications in Mathematical Physics 96(1984), 15-57; at 16. Repr. in his Theory and Applications of Cellular Automata (Singapore: World Scientific, 1986), 189-231 and his Cellular Automata and Complexity: Collected Papers (Reading: Addison Wesley, 1994), 159-202.

64 Christopher G. Langton, ‘Artificial Life’ [1989] in Margaret A. Boden (ed.), The Philosophy of Artificial Life (Oxford: Oxford University Press, 1996), 47.

65 John H. Holland, Hidden Order: How Adaptation Builds Complexity (Reading, MA: Addison-Wesley, 1995), 161-2.

66 Ibid. 171-2.

67 Schützenberger, ‘A propos de la “cybernétique”’ (above, n.14), 598.

Illustration credits

- 1 Courtesy of Princeton University History Department

- 2 Source: School of Mathematics and Statistics, University of St Andrews, Scotland, http://www-history.mcs.st-andrews.ac.uk/PictDisplay/Birkhoff_Garrett.html

- 3 Source: Collections of the University of Pennsylvania Archive

- 4 Source: http://www-personal.umich.edu/~natpoor/eniac/im/

- 5 Source: School of Mathematics and Statistics, University of St Andrews, Scotland, http://www-history.mcs.st-andrews.ac.uk/Mathematicians/Schutzenberger.html

- 6 Screenshot of an interview from the early 1960s

- 7 Source: http://www.flickr.com/photos/null0/, under license CC BY 2.0

- 8 Source: Wikimedia Commons, http://en.wikipedia.org/wiki/File:Alonzo_Church.jpg

- 9 Photo by Andrej Bauer